Need to understand a controversial human biology/genetics/psychology topic? You've come to the right place! That's because I know everything there is to know about everything and am uniquely endowed with the ability to perfectly describe EVERY science topic. What? Sarcasm? I don't even know what that is!

Friday, May 31, 2019

Moving to Medium

Tuesday, May 28, 2019

Relationship between poll quality and President Trump's (dis)approval rating

Methods: Trump presidential approval rating polling data were downloaded from this link (by clicking on the link labeled "presidential approval polls" at the bottom). The data were then analyzed to calculate average approval and disapproval ratings for a) polls with each specific letter grade from FiveThirtyEight, b) Rasmussen polls, and c) all polls overall, to test whether poll characteristics were related to the results.

Results: The average approval rating and disapproval rating across all polls was 42% and 54%, respectively. Letter grades for polls by agencies that had such grades assigned to them ranged from a minimum of D- to a maximum of A+. When grades were converted into a numerical score (D- = 1, then increasing by 1 more point for each letter-grade tier (D = 2, D+ = 3, C- = 4, etc.)), there was a strong negative correlation between letter grade and approval rating (r = -0.74), while the correlation between letter grade and disapproval rating proved to be very weakly positive (r = 0.06). There was also a fairly strong negative correlation between letter grade and net approval rating (the latter being % approval - % disapproval; r = -0.60). In addition, Rasmussen did indeed tend to produce higher average approval ratings for Trump than the average of all polls combined (47% vs. 42%), and it also produced lower average disapproval ratings (52% vs. 54%). Trump's average approval rating based only on the highest quality polls (A+ grade) is 41% and his disapproval rating based on such polls is 53%. Notably, this is also based on polls conducted during his entire presidency (so far); as such, it is unsurprising, but reassuring, that the average approval rating of 41% matches closely with the average of 40% estimated by Gallup.

Conclusions: I found strong evidence that higher quality polls tend to produce lower approval rating and net approval rating estimates, but no such effect was found for disapproval ratings, which tended not to vary systematically with FiveThirtyEight quality rating. On average, a 1-point increase in poll quality rating is associated with a 0.48-percentage-point decrease in Trump's approval rating. Furthermore, I corroborated previous reports indicating that, on average, Rasmussen polls tend to produce higher approval ratings for Trump (as well as lower disapproval ratings).
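For anyone who wants to repeat this kind of analysis, here is a minimal sketch of the grade-to-score conversion, the correlation, and the slope. It is not the actual spreadsheet used for this post: the `polls` rows are made-up placeholders, the function names are my own, and I am assuming the correlation is computed on per-grade averages (the Methods describe averaging by grade, but the exact procedure is an assumption here).

```python
from collections import defaultdict
from statistics import mean

# Letter grades from worst to best, so that D- = 1, D = 2, ..., A+ = 12.
GRADES = ["D-", "D", "D+", "C-", "C", "C+", "B-", "B", "B+", "A-", "A", "A+"]


def grade_to_score(grade):
    """Convert a letter grade to the 1-12 numerical score described above."""
    return GRADES.index(grade) + 1


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def ols_slope(xs, ys):
    """Least-squares slope of y on x (change in y per 1-point change in x)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


# HYPOTHETICAL (grade, approval %) rows, for illustration only.
polls = [("A+", 41.0), ("B", 42.5), ("B", 43.5), ("C+", 44.0), ("D-", 46.0)]

# Average approval within each grade, then correlate score with that average.
by_grade = defaultdict(list)
for grade, approve in polls:
    by_grade[grade_to_score(grade)].append(approve)

scores = sorted(by_grade)
avg_approval = [mean(by_grade[s]) for s in scores]

r = pearson_r(scores, avg_approval)
slope = ols_slope(scores, avg_approval)
print(f"r = {r:.2f}, slope = {slope:.2f} points per grade step")
```

The slope is the number to compare with the "per 1-point increase in poll quality" figure; with real data you would replace `polls` with the rows from the downloaded file.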

Thursday, May 23, 2019

Jerry Coyne's strategies on evolutionary biology

- Ad hominem fallacy: Researchers arguing for the EES and/or a significant role of epigenetic inheritance are biased because they are funded by the Templeton Foundation, whose underlying ideological bias against old-school neo-Darwinism presumably invalidates all research ever funded by them. E.g. "[Proponent of epigenetics Michael] Skinner is eating well from the Templeton trough. It’s pretty clear that Templeton is deeply invested in showing that the “conventional” view of evolution and genetics is wrong, for they’ve also put millions into other researchers to that end."

- More ad hominems: researchers are biased to exaggerate their conclusions because they want media attention for their claims of a paradigm shift; papers pushing transgenerational epigenetic inheritance are only published because of stupid, biased scientists who have an unshakeable belief in this (presumably nonexistent) process; the media and the public have an affinity for epigenetics stories; etc.

- Epigenetics is still fundamentally under genetic (DNA) control, so DNA-centrism is still valid. E.g. "the position of and influences on a cell can cause it to acquire methylation marks that turn it into difference courses of development: a liver cell, a kidney cell, a bone cell, and so on. But these changes, all inherited among cells in a single body, have resulted from natural selection: they’re adaptive because having different kinds of cells and tissues is adaptive. What has happened is that the DNA program itself, within the egg, contains information that says “methylate cell X at genes Y and Z if it experiences condition C”, and so on."

- Epigenetic inheritance doesn't last long enough to cause long-term evolution: "...we have no examples of such acquired methylation lasting more than two or three generations, so there’s no evidence that it could serve as a stable basis of inheritance, much less of adaptation."

- Studies supposedly showing that such inheritance exists are "...more often than not flawed, relying on p-hacking, small sample sizes, and choosing covariates, like sex, until you get one that shows a significant effect".

Tuesday, May 21, 2019

A meta-analysis of Joe Biden's support in primary polls.

This post outlines the methods and results of a simple meta-analysis of the % support received by Joe Biden in national 2020 Democratic primary polls. Currently, the meta-analysis includes 125 polls, all national polls obtained from FiveThirtyEight's poll aggregator. The effect size is the % of the vote Biden gets in a given poll, and the sample size is just the number of people polled. These polls included a total of 324,920 people. The meta-analysis was carried out in keeping with the instructions outlined here.

Note that I have only ever added polls to this meta-analysis, with one exception: an open-ended ABC/WaPo poll that I removed because it had an unusually high percent of respondents (35%) who said they were undecided, which also resulted in all the candidates' percentages being significantly deflated.

Due to high heterogeneity (I² = 94.8%), a random-effects meta-analysis was performed, yielding a summary effect of 30.5% (95% CI 29.6% - 31.4%). No evidence was found that smaller-sample polls were biased in Biden's favor: on the contrary, there was a moderate positive correlation between sample size and % support for Biden (r = 0.36).
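To make the random-effects step concrete, here is a rough sketch using the DerSimonian-Laird estimator on raw proportions. This is an assumption on my part about a reasonable way to do it, not necessarily the procedure in the instructions linked above; the function name and the example polls are hypothetical, and each poll's variance is approximated as p(1-p)/n.

```python
import math


def random_effects_proportion(polls):
    """DerSimonian-Laird random-effects pooling of poll proportions.

    polls: list of (p, n) with 0 < p < 1 the candidate's share and n the
    sample size. Returns (pooled, (ci_low, ci_high), i2_percent, tau2).
    """
    y = [p for p, _ in polls]
    v = [p * (1 - p) / n for p, n in polls]  # within-poll variance (binomial)
    w = [1 / vi for vi in v]                 # fixed-effect weights
    sw = sum(w)

    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    df = len(polls) - 1

    # Between-poll variance (tau^2), truncated at zero.
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # I^2: share of total variation attributed to heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    # Random-effects weights add tau^2 to every poll's variance.
    w_star = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * yi for wi, yi in zip(w_star, y)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), i2, tau2


# HYPOTHETICAL polls (share for the candidate, sample size).
example = [(0.25, 1000), (0.35, 1000), (0.31, 600), (0.29, 2000)]
pooled, ci, i2, tau2 = random_effects_proportion(example)
print(f"pooled = {pooled:.1%}, 95% CI {ci[0]:.1%} - {ci[1]:.1%}, I2 = {i2:.1f}%")
```

When the polls disagree by more than sampling error alone would predict, tau² is positive and the weights become more equal across polls, which is why large polls dominate a random-effects summary less than a fixed-effect one.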

Monday, May 20, 2019

Candidate-specific swing maps from 2016

What about where Clinton got a higher % of the vote than 2012 Obama (same rules of course, but w/colors reversed regarding higher/lower)?

This underscores how the 2016 election was indeed a Clinton loss more than a Trump win. Clinton lost more ground relative to Obama, and in more places, than Trump gained relative to Romney.

Monday, March 18, 2019

On the representativeness of exit polls II: the 2018 gubernatorial elections

Kemp = (.52*.46)+(.49*.54) = 50.4%, so 0.2% high.

Abrams = (.46*.46)+(.51*.54) = 48.7%, so 0.1% low.

For age (4):

Kemp = 49.9%, so 0.3% low.

Abrams = 48.8%, so exactly right.

For age (6):

Kemp = 49.7%, so 0.5% low.

Abrams = 48.0%, so 0.8% low.
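The calculation behind all of these estimates is just a weighted sum: each group's share of the electorate times the candidate's share within that group. A minimal Python sketch, using the gender numbers for Kemp and Abrams from above (the function name is my own, not something from the spreadsheet):

```python
def estimate_share(groups):
    """Estimate a candidate's overall vote share from exit poll groups.

    groups: list of (share_of_electorate, candidate_share_within_group),
    both expressed as fractions between 0 and 1.
    """
    return sum(weight * share for weight, share in groups)


# Gender exit poll numbers used above for the GA governor's race.
kemp = estimate_share([(0.46, 0.52), (0.54, 0.49)])
abrams = estimate_share([(0.46, 0.46), (0.54, 0.51)])

print(f"Kemp {kemp:.1%}, Abrams {abrams:.1%}")  # prints "Kemp 50.4%, Abrams 48.7%"
```

The same function works for the age breakdowns; you just pass four or six groups instead of two.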

Using the Excel spreadsheet I just threw together it is easy to calculate estimates for any race if you have the exit poll results (% of voters in each group and voting results by group). If you do it for the FL governor's race (won 49.6%-49.2% by the Republican Ron DeSantis; CNN's exit poll is here), you get this:

Gender: DeSantis = 49.8% (0.2% high), Gillum 48.7% (0.5% low).

Age (4): DeSantis = 49.9% (0.3% high), Gillum 48.8% (0.4% low).

Age (6): DeSantis = 49.0% (0.2% low), Gillum 48.3% (0.9% low).

For this Age (6) poll, the probable reason the estimates for both are a bit low is that 4% of 30-39 year old voters responded "No Answer" when asked who they voted for. Was this because they voted for a third-party/independent candidate, or did they not vote (for governor at least) at all? Probably a combination of both, but some of them probably just didn't answer even though they actually voted for DeSantis or Gillum.

CA: Democrat Newsom won the governor's race in CA with 61.9% of the vote to 38.1% for Republican Cox. Notably, it seems like these were the only two candidates on the ballot because of CA's weird runoff system, so 100% of all votes were for one or the other. Because at least 1% of respondents in some exit poll categories refused to answer, all percentages have to be recomputed as a share of the respondents who did answer (100% minus the % who didn't answer).

Using CNN's exit poll for that state yields these estimates for the results (all errors are +/- based on value of (estimated-actual)):

Gender: Newsom 60.9% (-1.0%), Cox 39.1% (+1.0%)

Age (4): Newsom 60.8% (-1.1%), Cox 39.2% (+1.1%)

Age (6): Newsom 60.7% (-1.2%), Cox 39.3% (+1.2%)
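The rescaling described above for CA (dropping the "no answer" respondents so the two candidates sum to 100%) can be sketched like this. The numbers in the example are hypothetical, not taken from CNN's exit poll, and the function name is my own:

```python
def renormalize(shares, no_answer):
    """Rescale within-group shares so they are out of respondents who answered.

    shares: dict of candidate -> fraction of ALL respondents in the group.
    no_answer: fraction of respondents in the group who gave no answer.
    """
    answered = 1.0 - no_answer
    if answered <= 0:
        raise ValueError("no respondents answered in this group")
    return {name: share / answered for name, share in shares.items()}


# HYPOTHETICAL group: 59% Newsom, 39% Cox, 2% no answer.
adjusted = renormalize({"Newsom": 0.59, "Cox": 0.39}, no_answer=0.02)
print({name: f"{share:.1%}" for name, share in adjusted.items()})
```

After this step the adjusted shares for the two candidates add up to 100% within each group, and the group-weighted sum can be taken as usual.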

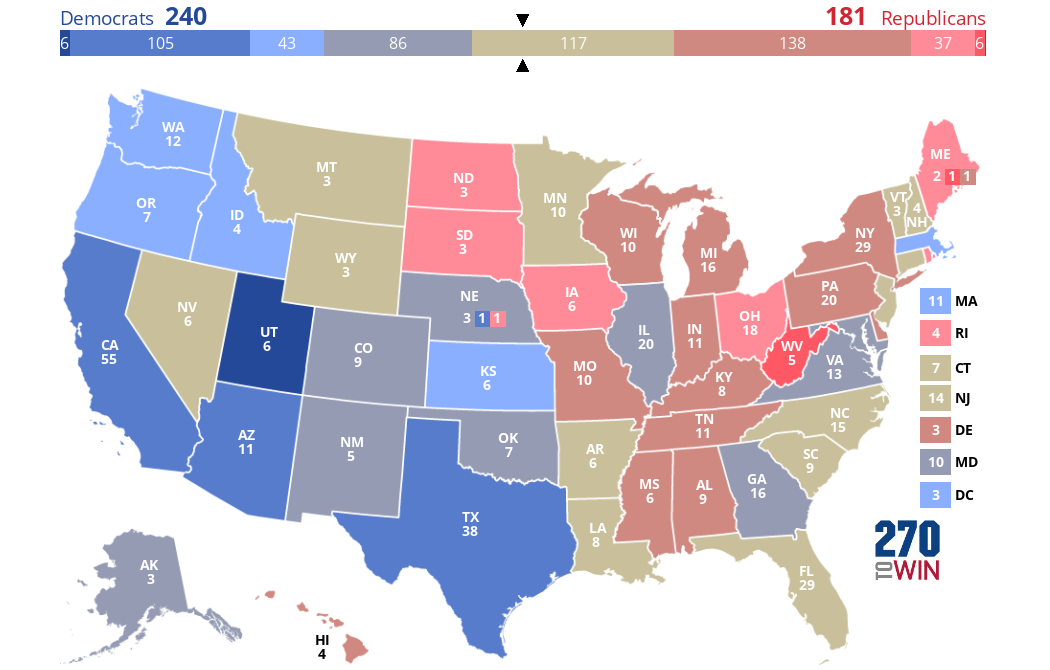

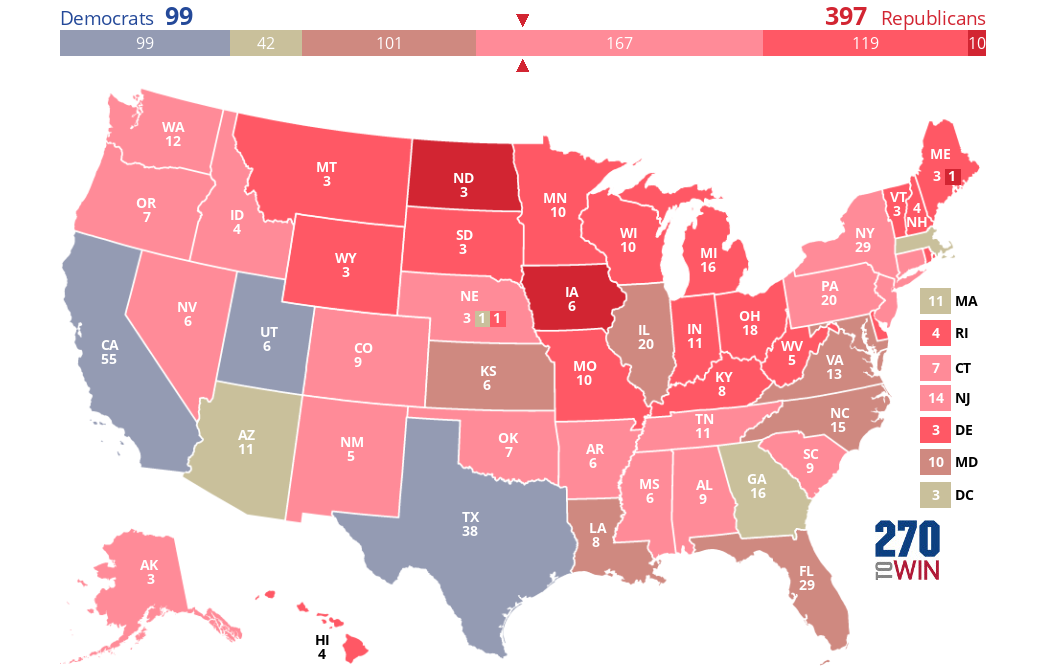

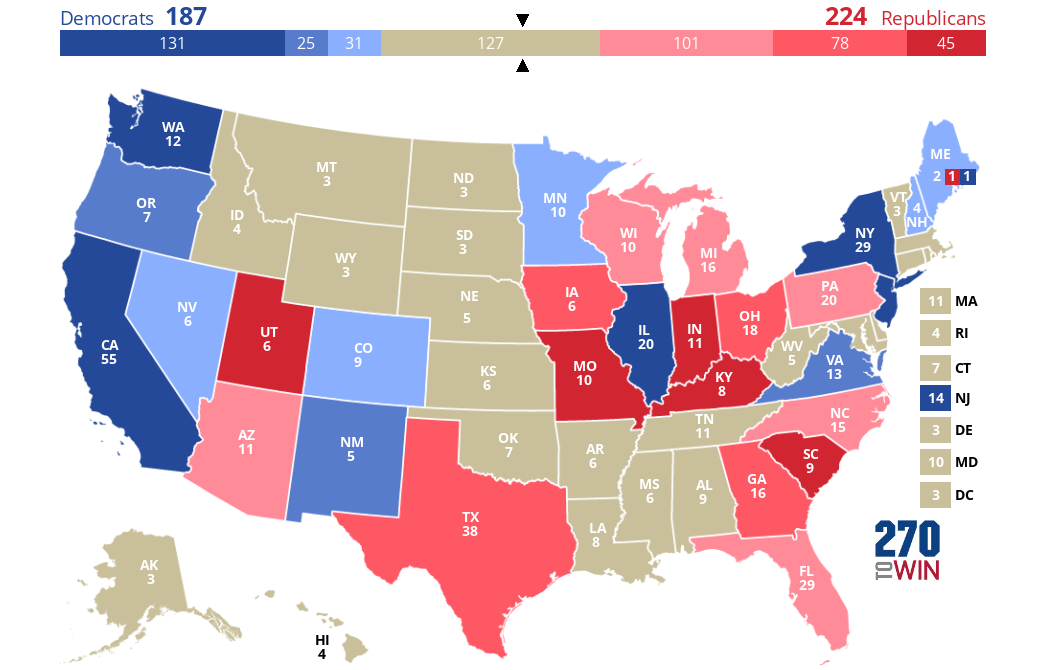

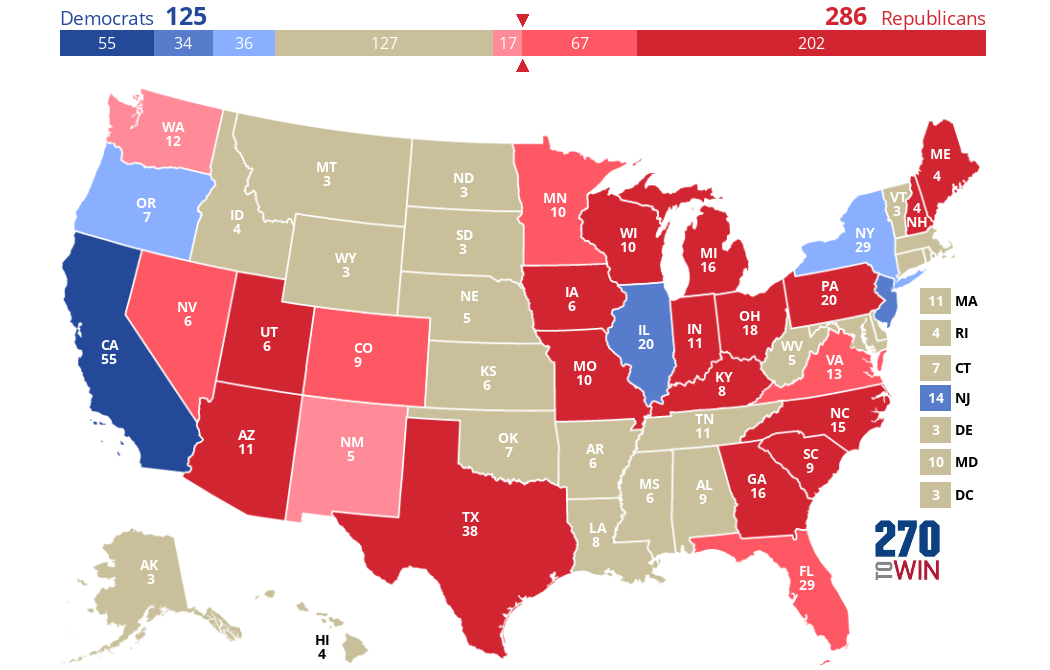

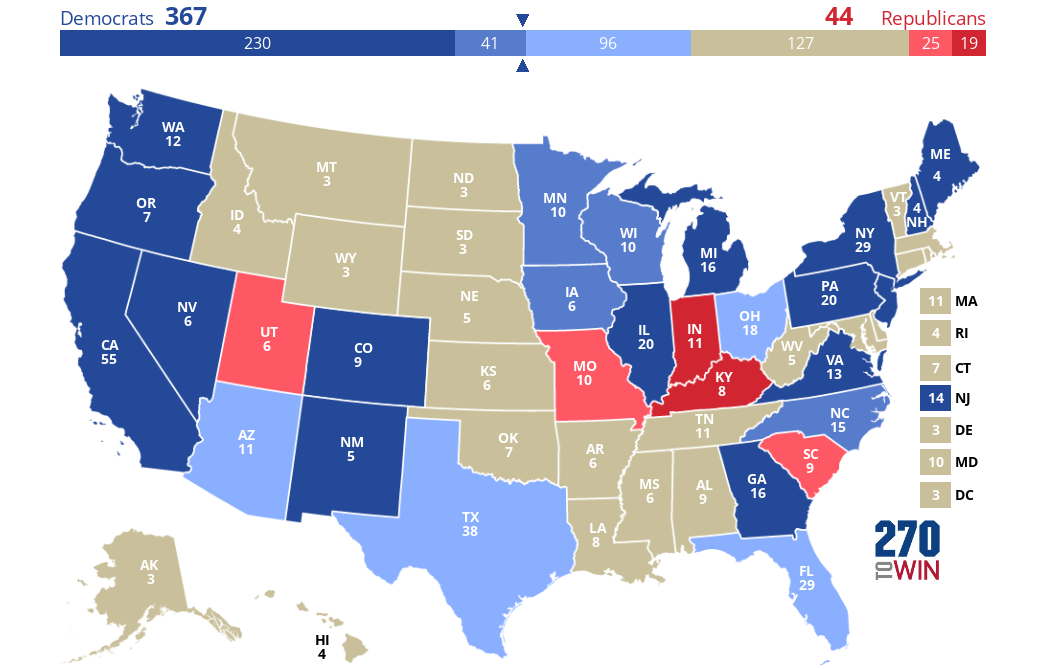

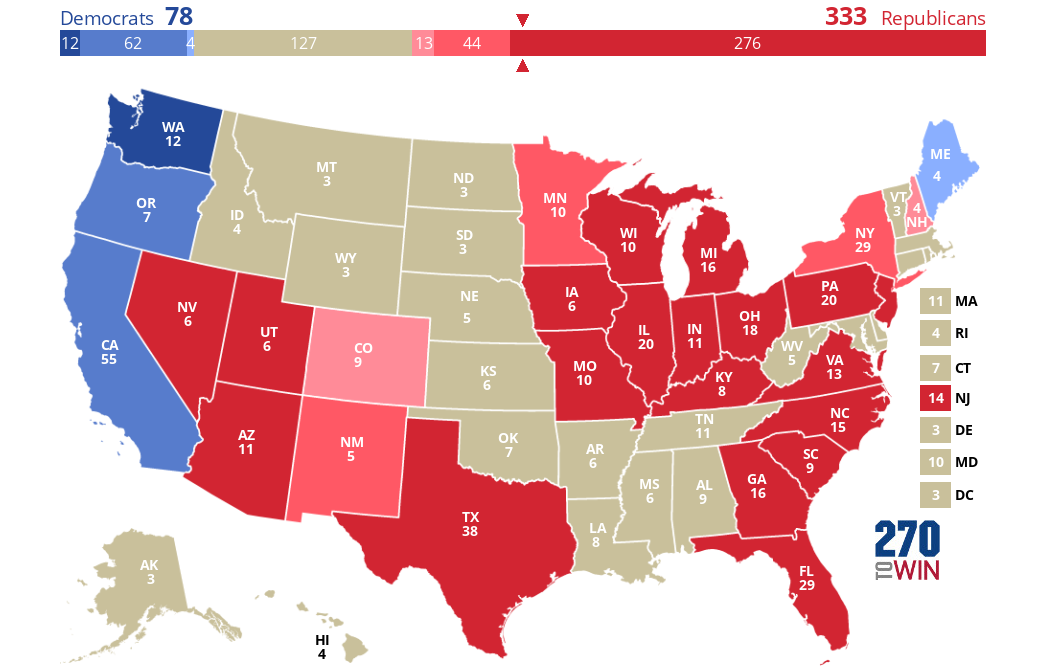

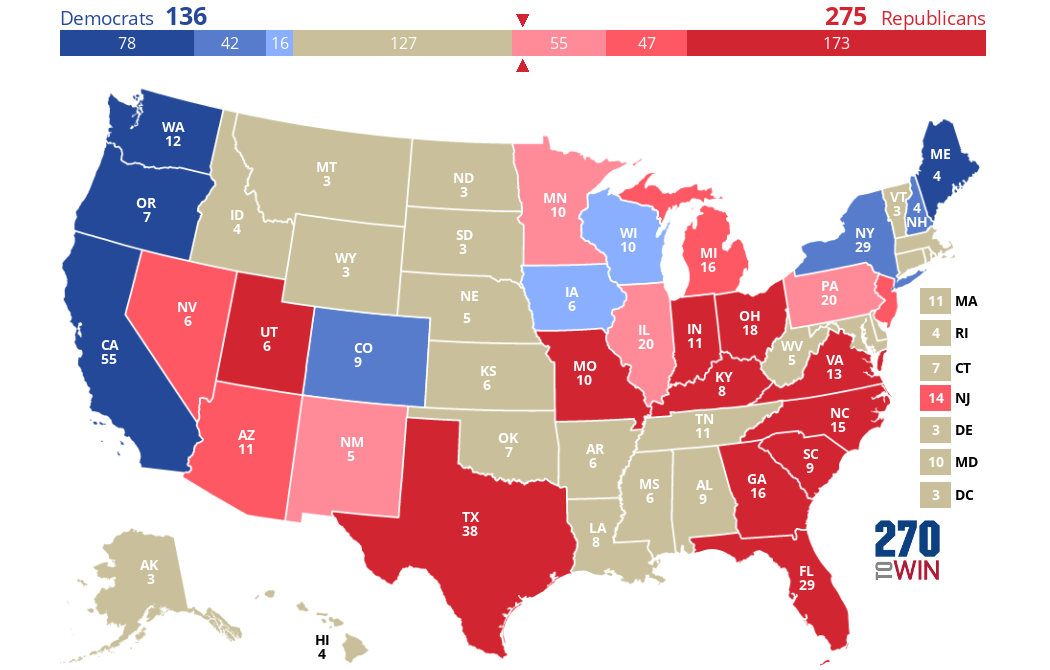

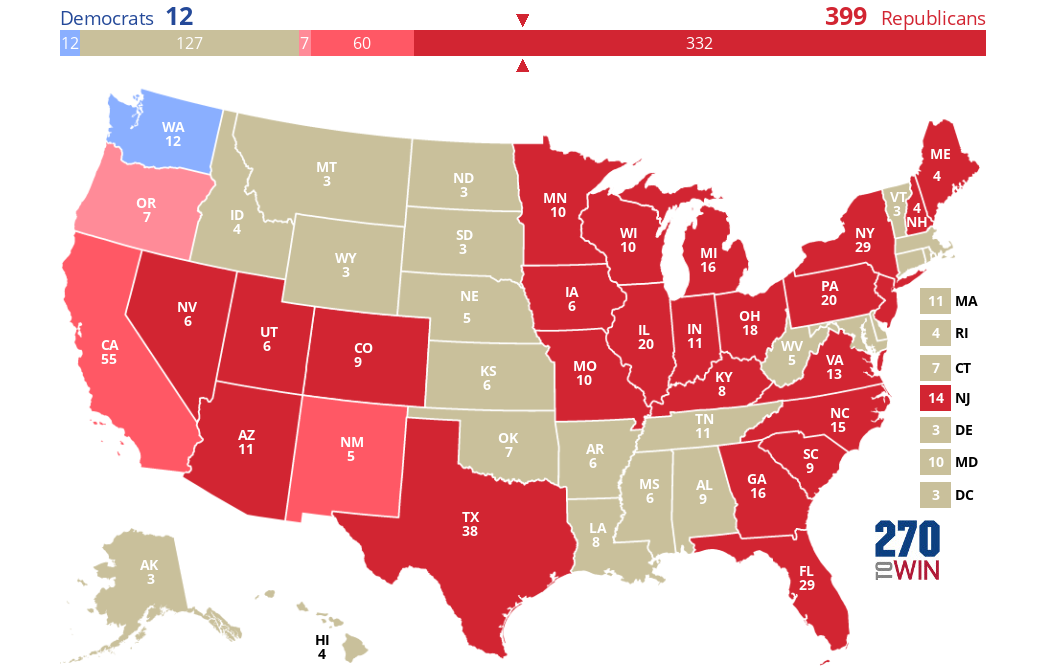

If only X could vote

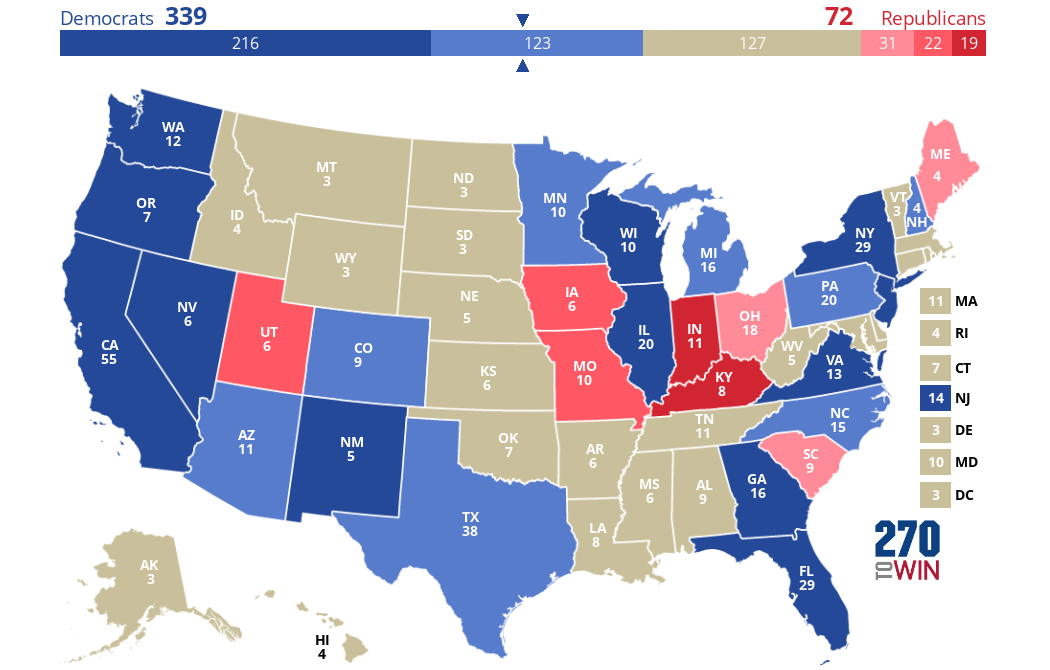

Let's start by taking the actual 2016 EV results (recall they were 306 Trump, 232 Clinton) and graying out all the states for which CNN has no exit polls at all. As you can see, plenty of states are grayed out: mostly ones Trump won in the Great Plains/Western area, as well as some Trump-loving southern states and a few liberal ones in the Northeast. The 2016 exit polls covered 28 states, leaving out 22 states and DC. In total, we see Trump with 224 EVs and Clinton with 187, so no one has enough to win here:

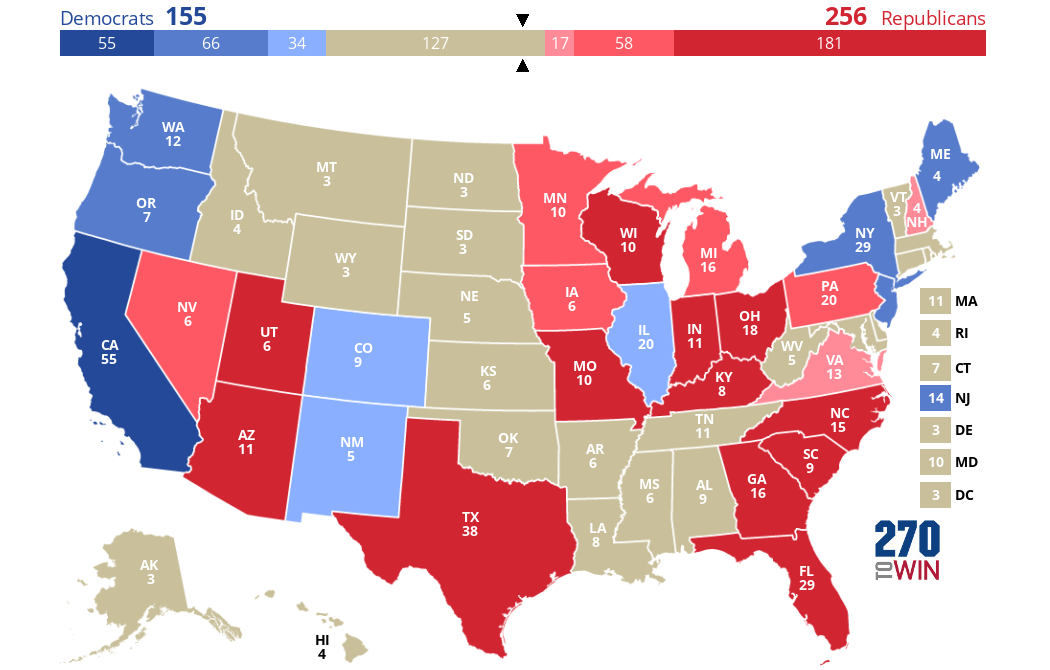

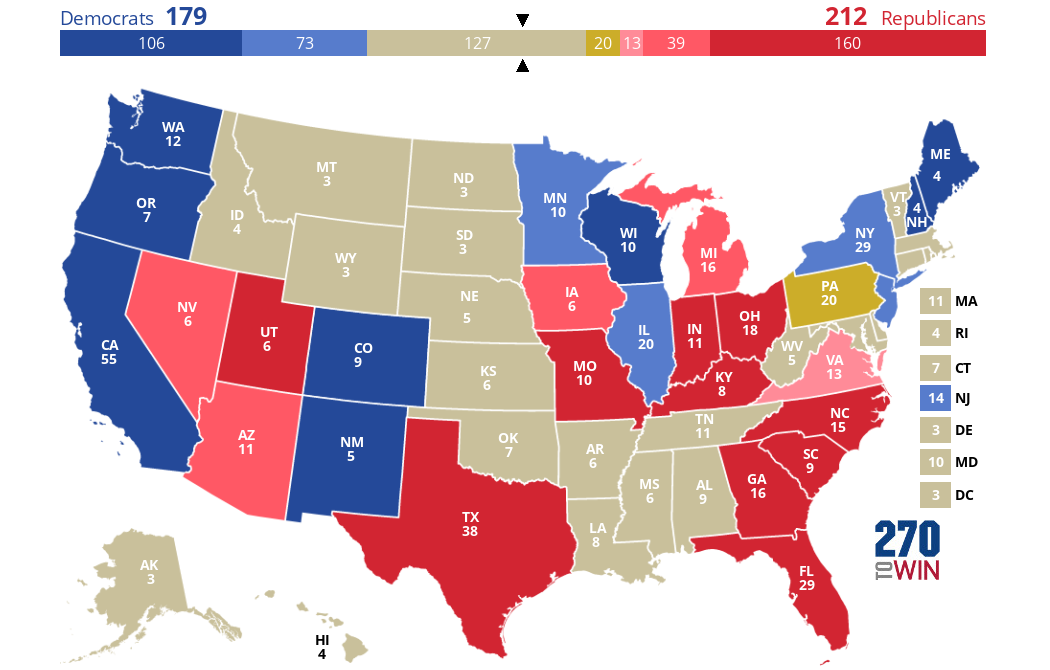

- White men

- White people overall

- Men overall

- White women

- People 45/older

- The American electorate overall

- White college graduates

- People under the age of 45

- Women overall

Monday, March 11, 2019

Why do hereditarians love the word "empirical" so much?

In this post I cite examples of hereditarian IQ/race/genetics researchers (Robert Plomin, Ian Deary, etc.) using appeals to "empirical" evidence to support their views. Their use of the word "empirical" specifically to defend themselves against their critics recurs weirdly often to the point where they seem to "get off" on using this word. And often their uses of it seem entirely redundant in the context in question. All emphases that follow are mine.

Example 1: "Concerning the equal environments assumption in general, empirical data based on most twin studies ever published point to little or no influence of shared environmental factors on twin similarity" (Arden et al. 2016).

Example 2: "A theoretical logic provides a useful framework for considering the empirically discovered links between intelligence and health. This framework is useful in generating empirical research questions such as ours" (Arden et al. 2016).

Example 3: [After describing E.G. Boring's 1923 quote "Intelligence is what the tests test"] "The apparently dismissive comment came after a summary of strong empirical findings — for example, that the tests showed marked individual differences, that the differences were stable over time, that children developed greater intelligence over time but tended to maintain the same rank order" (Deary et al. 2010, p. 202).

Example 4: "[Psychologist Howard] Gardner has intentionally avoided empirical tests of his theory [of multiple intelligences or MI], but those that have been made show most of his MI to be correlated with one another...The theories that do not accommodate this finding [referring to the positive correlations between different mental tests] — such as those of Thurstone, Guilford, Sternberg and Gardner — fail the most basic empirical tests" (Deary et al. 2010, p. 204).

Example 5: "More than 100 years of empirical research provide conclusive evidence that a general factor of intelligence (also known as g, general cognitive ability, mental ability and IQ (intelligence quotient)) exists, despite some claims to the contrary" (Deary et al. 2010, "Key points").

Example 6: "...we estimated the heritability of height from empirical genome-wide identity-by-descent sharing..." (Visscher et al. 2006)

Example 7: "...the empirical variance of IBD sharing is likely to be an underestimate because the marker information was not perfect" (Visscher et al. 2006).

Example 8: "They also failed to highlight that the theoretical discussion actually revolved around an empirically testable question...To conduct the test, I drew on an empirical model..." (Littvay 2012)

Example 9: "The findings place the burden on critics to present theoretical work on the specific mechanisms of EEA violations based on which additional empirical assessments could (and should) be conducted." (Littvay 2012)

Example 10: "...these criticisms, like most of the literature questioning the validity of the EEA [equal environments assumption], are made on the basis of secondary analysis of published research, not on the basis of empirical examination of CTD [classical twin design] assumptions on political variables." (Smith et al. 2012, p. 19)

Sunday, March 3, 2019

On the representativeness of exit polls I: the 2016 general presidential election

I will start with my own state, Georgia. In the actual results, Trump got 50.4% of the vote in this state, while Clinton got 45.3%. If we look at the gender exit polls for Georgia, we see the following:

- 55% of voters were female, 45% were male.

- Of the female voters, 54% voted for Clinton, while 43% chose Trump.

- Conversely, of the male voters, 60% chose Trump while only 37% chose Clinton.

- P(M) = 0.45,

- P(C|M) = 0.37,

- P(T|M) = 0.6,

- P(F) = 0.55,

- P(C|F) = 0.54, and

- P(T|F) = 0.43.
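These quantities combine through the law of total probability. Writing it out with the Georgia numbers above (C = Clinton, T = Trump, M = male, F = female) gives the figures in the "Sex" row of the Georgia table:

```latex
\begin{aligned}
P(C) &= P(C \mid M)\,P(M) + P(C \mid F)\,P(F)
      = (0.37)(0.45) + (0.54)(0.55) = 0.4635 \approx 46.4\% \\
P(T) &= P(T \mid M)\,P(M) + P(T \mid F)\,P(F)
      = (0.60)(0.45) + (0.43)(0.55) = 0.5065 \approx 50.7\%
\end{aligned}
```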

| Georgia | C | T | How far off (Clinton)? | How far off (Trump)? |
| --- | --- | --- | --- | --- |
| Actual | 45.3% | 50.4% | | |
| Sex | 46.4% | 50.7% | 1.1% | 0.3% |
| Race (2) | 45.8% | 50.6% | 0.5% | 0.2% |
| Age (2) | 45.8% | 50.8% | 0.5% | 0.4% |
| Age (6) | 46.0% | 50.7% | 0.7% | 0.3% |
| Age (4) | 46.2% | 50.6% | 0.9% | 0.2% |
| Race (5)* | 42.0% | 48.8% | -3.3% | -1.6% |
| Race & gender | 43.8% | 50.3% | -1.5% | -0.2% |

| Arizona | C | T | How far off (Clinton)? | How far off (Trump)? |
| --- | --- | --- | --- | --- |
| Actual | 44.6% | 48.1% | | |
| Sex | 44.6% | 48.9% | 0.0% | 0.8% |
| Race (2) | 44.8% | 48.5% | 0.2% | 0.4% |
| Age (2) | 44.7% | 48.9% | 0.1% | 0.8% |
| Age (6)* | 37.6% | 44.3% | -7.0% | -3.8% |
| Age (4) | 44.4% | 48.5% | -0.2% | 0.4% |
| Race (5)* | 39.2% | 45.2% | -5.4% | -3.0% |
| Race & gender* | 39.7% | 44.6% | -4.9% | -3.5% |
| Education (4) | 44.7% | 48.8% | 0.1% | 0.7% |
| Education (2) | 44.5% | 48.5% | -0.1% | 0.4% |

Lastly, I included California exit poll results (also from CNN) because it is the most-populated state, so surely they should be especially accurate there.

My results for the entire country, as well as for AZ, CA, and GA, are shown below. Note that these results include only the margin of victory (MOV) as estimated from each exit poll category (sex, race (2), etc.), not the % estimated for either candidate. Overall, the exit polls seem to be very representative. Excluding missing data (corresponding to all values shown in red below) makes both the AZ and CA exit polls more accurate, but it has no effect on the national polls, for the simple reason that these polls had no missing data; for GA, this exclusion actually made the estimated MOV less accurate.

Lastly, CNN's national exit poll matched the actual results much more closely than did the Times'. Why? CNN's national poll was based on 24,558 respondents, while the Times' was apparently based on 24,537. It seems unlikely that those 21 extra voters made such a big difference in accuracy between the two polls. Additionally, at the bottom of the page for the Times' poll, it says: "Data for 2016 were collected by Edison Research for the National Election Pool, a consortium of ABC News, The Associated Press, CBSNews, CNN, Fox News and NBC News." This seems to imply that the source for CNN's and the Times' exit poll data is actually exactly the same. Why the results are slightly different, then, is not clear (e.g. CNN says Trump got 52% of the male vote, Times says 53%).

| MOV | AZ | CA | GA | National (NYT) | National (CNN) |
| --- | --- | --- | --- | --- | --- |
| Sex | -4.3% | 28.7% | -4.3% | 0.5% | 1.7% |
| Race (2) | -3.8% | 29.4% | -4.8% | 0.0% | 1.2% |
| Age (2) | -4.3% | 29.7% | -5.0% | 0.0% | 1.7% |
| Age (6) | -6.7% | 29.6% | -4.7% | 0.0% | 1.6% |
| Age (4) | -4.1% | 29.5% | -4.5% | 0.6% | 1.8% |
| Race (5) | -6.0% | 28.1% | -6.8% | 0.9% | 1.8% |
| Race & gender | -4.9% | 24.7% | -6.4% | 0.0% | 1.9% |
| Education (4) | -4.1% | 28.2% | -4.5% | 1.1% | 1.9% |
| Education (2) | -3.9% | 28.6% | -5.0% | 0.0% | 1.5% |
| Average | -4.7% | 28.5% | -5.1% | 0.3% | 1.7% |
| Average (excl. miss.) | -4.1% | 29.1% | -4.7% | 0.3% | 1.7% |
| Actual MOV | -3.5% | 30.0% | -5.1% | 2.1% | 2.1% |
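As a check on the two "Average" rows, here is a short sketch that recomputes them for the AZ column. The MOV values are copied from the table; which categories count as "missing data" is an assumption on my part (I am using the three AZ categories marked with an asterisk in the Arizona table earlier in this post).

```python
from statistics import mean

# AZ margin-of-victory estimates (Clinton minus Trump, in points), from the table.
az_mov = {
    "Sex": -4.3,
    "Race (2)": -3.8,
    "Age (2)": -4.3,
    "Age (6)": -6.7,
    "Age (4)": -4.1,
    "Race (5)": -6.0,
    "Race & gender": -4.9,
    "Education (4)": -4.1,
    "Education (2)": -3.9,
}

# ASSUMPTION: the starred AZ categories are the ones with missing data.
az_missing = {"Age (6)", "Race (5)", "Race & gender"}

avg_all = mean(az_mov.values())
avg_excl = mean(v for k, v in az_mov.items() if k not in az_missing)
actual = -3.5

print(f"all categories: {avg_all:.1f} (off by {avg_all - actual:+.1f})")
print(f"excl. missing:  {avg_excl:.1f} (off by {avg_excl - actual:+.1f})")
```

Under that assumption the two averages come out to -4.7 and -4.1, matching the AZ column.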

Tuesday, February 12, 2019

Emil Kirkegaard's self-citations

| | Total cites | Self-cites |
| --- | --- | --- |
| Top 6 | 164 | 112 |
| 7 | 13 | 12 |
| 8 | 11 | 8 |
| 9 | 11 | 10 |
| 10 | 10 | 2 |
| 11 | 10 | 10 |
| 12 | 10 | 10 |
| 13 | 9 | 8 |
| 14 | 9 | 9 |
| 15 | 9 | 7 |
| 16 | 9 | 6 |
| 17 | 8 | 7 |
| 18 | 8 | 8 |
| 19 | 7 | 7 |
| 20 | 7 | 5 |
| 21 | 6 | 3 |
| 22 | 6 | 5 |
| 23 | 6 | 6 |
| 24 | 6 | 6 |
| 25 | 6 | 6 |
| 26 | 6 | 6 |
| 27 | 5 | 4 |
| 28 | 5 | 5 |
| 29 | 4 | 4 |
| 30 | 4 | 3 |
| 31 | 4 | 4 |
| 32 | 4 | 4 |
| 33 | 4 | 4 |
| 34 | 4 | 4 |
| 35 | 4 | 4 |
| 36 | 4 | 4 |
| 37 | 3 | 3 |
| 38 | 3 | 3 |
| 39 | 3 | 1 |
| 40 | 2 | 2 |
| 41 | 2 | 2 |
| 42 | 2 | 2 |
| 43 | 2 | 1 |
| 44 | 2 | 0 |
| 45 | 1 | 1 |
| 46 | 1 | 1 |
| 47 | 1 | 1 |
| 48 | 1 | 1 |
| 49 | 1 | 1 |
| 50 | 1 | 0 |
| 51 | 1 | 0 |
| 52 | 1 | 1 |
| 53 | 1 | 0 |
| 54 | 1 | 1 |
| 55 | 1 | 1 |
| 56 | 1 | 1 |

Wednesday, February 6, 2019

The "Trump economy": fact or fiction?

The right-wing hashtag #jobsnotmobs, which was widely shared on Twitter during the elections last year, seems to suggest that Trump's policies have benefited American workers by reducing the unemployment rate. This claim can be tested empirically using the "interrupted time series" design. This method involves looking at trends before an intervention, then comparing them to the trends after an intervention, to see what effect (if any) the intervention had on the outcome being measured.

Monthly national unemployment data were downloaded from the Bureau of Labor Statistics website. Because Trump's critics typically credit Obama for the economic improvements that have taken place since Trump took office, the pre-Trump trend in the unemployment rate will be defined based on the start and end of the Obama administration. For this part, I will be generous to Trump and give him credit for all economic trends that happened from Nov. 2016 onward, even though he didn't take office until January 2017 and Nov. 2016 includes a week before Trump won the election. This yields two possible definitions of the "pre-Trump" period:

- Jan. 2009 - Oct. 2016 (inclusive)

- Feb. 2009 - Oct. 2016 (inclusive)
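To illustrate the interrupted time series idea, here is a simplified sketch: fit a straight line to the pre-intervention months, project it forward, and see how far the post-intervention values fall from that projection. This is a stripped-down version of the design (a full analysis would typically use segmented regression and account for autocorrelation), the series below is synthetic rather than real BLS data, and the function names are my own.

```python
def fit_line(ts, ys):
    """Ordinary least-squares line y = intercept + slope * t."""
    n = len(ts)
    mt = sum(ts) / n
    my = sum(ys) / n
    slope = sum((t - mt) * (y - my) for t, y in zip(ts, ys)) / sum(
        (t - mt) ** 2 for t in ts
    )
    return my - slope * mt, slope


def its_effect(series, n_pre):
    """Mean gap between post-period values and the projected pre-period trend.

    series: monthly values in time order; the first n_pre are pre-intervention.
    A value near zero means the post-period simply continued the old trend.
    """
    intercept, slope = fit_line(range(n_pre), series[:n_pre])
    gaps = [
        y - (intercept + slope * t)
        for t, y in enumerate(series[n_pre:], start=n_pre)
    ]
    return sum(gaps) / len(gaps)


# SYNTHETIC example: a rate falling 0.1 points/month, with no change in
# trend after month 12, so the estimated "effect" should be about zero.
unchanged = [10 - 0.1 * t for t in range(24)]
print(f"{its_effect(unchanged, n_pre=12):+.2f}")
```

With real data, `series` would be the monthly unemployment rate and `n_pre` the number of months in whichever "pre-Trump" definition is being used; an effect close to zero would mean the post-period is just a continuation of the earlier trend.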

Wednesday, January 30, 2019

How do behavior geneticists respond to epigenetics?

The first answer to the above question is that BG is fundamentally focused on DNA as though it were the only way that human traits could be biologically transmitted across generations. But Charney (2012a) has noted:

"DNA can no longer be considered the sole agent of inheritance...The epigenome, that is, the complex biochemical system that regulates DNA expression, turning genes on and off and modulating their “transcribability,” has been found to be heritable, both somatically and intergenerationally via the germline, enabling the biological inheritance of traits with no changes to the DNA sequence. Furthermore, the epigenome is highly environmentally responsive. Environmentally induced changes in gene transcribability can have long-term – sometimes lifelong – phenotypic consequences."

So what does this all mean? It means that you can't just focus on the DNA; you must also focus on epigenetic processes as means of transmitting biological "information" through generations. Right? And it also looks like epigenetic processes can both alter DNA expression and be changed by environmental factors. This latter phenomenon, if it is true, means changes in the environment could indirectly lead to changes in expression of DNA, meaning that the environment can affect genes. Surely this means that it is meaningless to refer to "genes" vs. "environment" as separate when this relationship demonstrates that they are anything but, with changes in the latter capable of changing expression of the former?

Maybe not: one common tactic of BG researchers when confronted with the supposed existence of transgenerational epigenetic inheritance is to say "nuh-uh!". More precisely they tend to argue that the evidence for epigenetic inheritance allowing traits to be inherited without DNA sequence changes is weak or nonexistent. For example, Barnes et al. (2014) claim,

"...epigeneticists are urging social scientists to be more cautious when discussing epigenetic influences on social behavior. In the words of two preeminent epigeneticists, Heijmans and Mill (2012: 4): “[E]pigenetics will not be able to deliver the miracles it is sometimes claimed it will.” Perhaps unknown to sociologists who have hung their future of the field on epigenetics, epigeneticists are confronted with the same problems genomic and biosocial scientists are encountering."

Similarly, Battaglia (2012) noted, "the relationship between widespread epigenetic marks and genetic expression is still controversial." This particular paper was also cited by Liu & Neiderhiser (2017, p. 100), who also make a very similar point in saying (p. 101), "findings from epigenetic studies are still controversial and inconsistent, especially in humans".

Let's return to Liu & Neiderhiser (2017), a book chapter that goes into more detail than anything else I've seen in "debunking" conceptual criticisms of BG. This same chapter states (pp. 100-101): "...proponents of an epigenetic approach would expect that the environmental and behavioral inputs over time would contribute to increasing variation in gene expression, thus decreasing the phenotypic concordance among MZ and DZ twin pairs. However, this prediction is not consistent with the finding that for intelligence, the degree of similarity among MZ twin pairs increases throughout the life span and the heritability of intelligence continues to increase linearly with age". This argument is also made by Battaglia (2012). Charney (2012b) responds to Battaglia by saying the following:

"Both Battaglia and MacDonald & LaFreniere argue against the claim that MZ twins become more epigenetically and genetically discordant over their lifetimes by citing studies that purport to show that heritability increases with age. I am not sure if their claim is that these studies demonstrate that epigenetic discordances of MZ twins do not, in fact, increase over time, or that, although they may increase over time, they have no effect upon phenotypes. If the former, then clearly the results of a twin study cannot refute the existence of increasing epigenetic discordance, a phenomenon that has been repeatedly demonstrated by advanced molecular techniques (Ballestar 2009; Fraga et al. 2005; Kaminsky et al. 2009; Kato et al. 2005; Martin 2005; Mill et al. 2006; Ollikainen et al. 2010; Petronis et al. 2003; Poulsen et al. 2007; Rosa et al. 2008). To deny this would require a refutation of these studies. So, I take the argument to be the latter, namely, that studies that purport to show that heritability increases with age demonstrate that whatever epigenetic (and genetic) changes MZ twins experience over their lifetimes have no effect upon, for example, cognitive development."

More criticisms of the argument that epigenetics has significant effects that are relevant to BG are made by Moffitt & Beckley (2015). First, they claim, "...methylation is ubiquitous and normative, and usually it has nothing to do with experience but is part of organism development that is, incidentally, under genetic control. Because the genome is identical in each of our cells, during normal development, most of our genes must be methylated, lest a kidney cell grow into an eye or a fingernail cell grow into an ear. Against this normative background, methylation marks that can be statistically associated with external experience are relatively rare, and effect sizes are expected to be small." They also have six more criticisms of epigenetics-centered human behavioral research, but criticisms 2-4 inclusive are all technical and centered around whether such research is feasible, not whether there is strong evidence for the reality of epigenetic effects on DNA expression and thus on behavior.

Before moving on to Moffitt & Beckley's last 2 objections, I will note something about the first sentence in the quote I cited in the above paragraph. They claim that methylation is part of the development of the organism, and that (this is crucial) this development is "under genetic control". Really? In any case, DNA methylation is only one kind of epigenetic process: there are also other processes like histone acetylation, "histone methylation, phosphorylation, or ubiquitination, to name a few" (Moore 2017). Thus, it is weird that Moffitt & Beckley devote 100% of the epigenetics-related attention in their article to DNA methylation. Moreover, they are claiming that environmental factors do not significantly affect DNA methylation and that this process does not significantly vary from a baseline level, but they do not cite any sources to support this claim.

What is objection 5? Here's an excerpt: "...although a small set of nonhuman studies provide initial evidence that experience can apparently alter methylation, it is far from clear that the detected methylation alterations have any consequences for health or behavior. Before methylation can affect health or behavior, it must alter expression of genes. Links from methylation data forward to gene expression data are not yet known." This is also an argument from Battaglia (2012). Charney (2012b) responded to Battaglia's argument that there is not very much evidence that epigenetics affects human behavior by saying,

According to Battaglia, though epigenetic effects are potentially important, the individual and specific impact on brain and behavior is neither well understood nor unambiguously linked to gene expression data. In support of this assertion, he mentions a study by Zhou et al. (2011). Whatever Battaglia's precise intent in mentioning this study, their conclusion unambiguously links epigenetic changes to changes in gene expression and behavior:

There are also some baseless arguments-by-assertion merely claiming, with no supporting evidence, that epigenetic processes and their ability to mediate environmental effects on gene expression do not pose a fatal problem for BG studies. Here's an example from a response of some BG researchers to critiques (McGue et al. 2005): "Although the study of epigenetic phenomena may provide a powerful paradigm for developmental psychology, it will not obviate the need for twin, adoption, and family research like that reflected in our article (McGue et al., 2005)." This strange and totally unsupported argument has made its way elsewhere: Liu & Neiderhiser (2017, p. 100) cite McGue et al. (2005) and make the same exact claim with no additional support.

Other fallacies that rear their heads in the responses-to-epigenetics literature include the good-old-fashioned straw man. For example, you could construct a straw man version of epigenetics and developmental systems theory, and the associated schools of thought, according to which proponents of these ideas believe that DNA is totally irrelevant to human behavior. Of course, like any good straw man, this is a totally inaccurate representation of these people's views, but that doesn't stop Liu & Neiderhiser (2017, p. 100) from informing us that "DNA sequence variations are important and will continue to be important." Duh! Who are these imaginary epigenetics proponents claiming that DNA should be ignored entirely? They don't exist! If you wanna know what these individuals actually believe, maybe read some of their work, such as a recent paper by Overton & Lerner (2017, p. 117):

- "the burgeoning and convincing literature of epigenetics means that genetic function is a relatively plastic outcome of mutually influential relations among genes and the multiple levels of the context within which they are embedded (cellular and extracellular physiological processes, psychological functioning, and the physical, social, and cultural features of the changing ecology [e.g., Cole, 2014; Slavich & Cole, 2013])."

But BG researchers always have one more consistent "out" when confronted with epigenetics: claim that it can be incorporated into BG research. This is one of the most common answers to the question that forms the title of this post. Liu & Neiderhiser (2017), for instance, after casting doubt on the importance and DNA-independence of human epigenetics for almost two full paragraphs, tell us (p. 101), "In sum, we wish to emphasize that family-based behavioral genetic approaches are a promising way to study complex epigenetic effects and gene expression." This message seems oddly inconsistent with what they said in the previous dozen sentences or so, but OK. Moffitt & Beckley make a similar but distinct suggestion: that twin studies be used not to estimate heritability (their most common purpose historically), but to rule out potential confounding factors and biases:

"Dizygotic twins are ideal for testing what factors explain behavioral differences between siblings who are matched for age, sex, ethnic background, and most early rearing experiences. Discordant monozygotic twins are ideal for studying environmentally induced variation in the behavior of siblings matched even further, for genotype (Moffitt, 2005a)...The current recommendation from the experts is, if you plan to study human epigenetics, then at least use twins." (Moffitt & Beckley 2015)

I admit, that last sentence made me chuckle (it's clearly a reference to the common cliche about at least using a condom if you plan to have sex). Note that in making this recommendation, Moffitt & Beckley do not argue that classical twin studies or heritability estimation are scientifically valid or worthwhile; instead they try to argue that twin studies should be used for a totally different purpose.